Upstage LLM, ‘Solar Mini’ performance report

2024/01/25 | 3 min read

Small but powerful: Upstage's own LLM, 'Solar Mini'

THE OVERWHELMING BEST AMONG MODELS UNDER 30B

The era of ‘Solar’, an LLM recognized around the world, has arrived. Solar, a large language model (LLM) developed by Upstage, drew attention last December by taking first place on Hugging Face's Open LLM Leaderboard. Despite its compact size, Solar Mini delivers answers comparable to GPT-3.5 while running 2.5 times faster. Here is how Solar Mini dramatically shrinks model size while maintaining performance.

Solar’s strengths

Why you need a mini LLM

Size has become a critical factor in integrating large language models (LLMs) into real-world applications. A smaller model needs less computation per request, so it returns results faster and uses resources more efficiently. For the same reason, adapting it to a specific field or service takes less manpower and fewer resources. A smaller size also enables on-device AI: the LLM is embedded in the device itself and provides AI capabilities directly on the user's local hardware. Running an LLM on-device not only improves accessibility but also reduces dependence on GPU resources, opening new avenues for deploying high-performance AI solutions at an affordable price.
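To make the size argument concrete, a rough rule of thumb is that storing a model's weights takes parameter count × bytes per parameter. The minimal sketch below applies it to a 10.7B-parameter model and to a 30B model (the boundary in the heading above), at 16-bit and 4-bit precision. It is an estimate under stated assumptions: activations, the KV cache, and framework overhead are ignored.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed to store model weights alone, in GB (1 GB = 1e9 bytes).

    Activations, the KV cache, and framework overhead are ignored.
    """
    bytes_per_param = bits_per_param / 8
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for label, params in [("10.7B", 10.7e9), ("30B", 30e9)]:
        for bits in (16, 4):
            print(f"{label} params @ {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

By this estimate, a 10.7B-parameter model needs about 21 GB at 16-bit precision and about 5 GB at 4-bit, versus about 60 GB and 15 GB for a 30B model, which illustrates why smaller models are much easier to fit on a single GPU or a local device.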

Solar response speed

Compact size, powerful performance

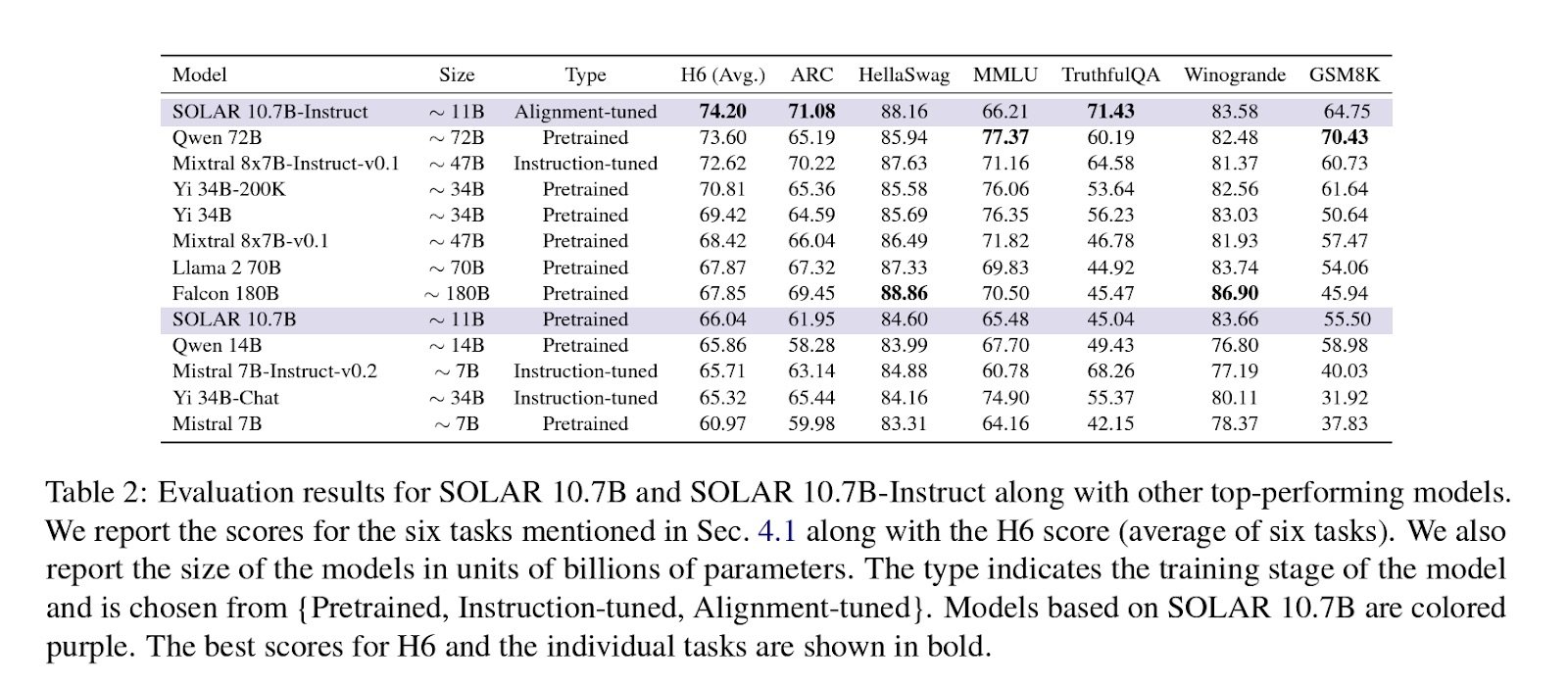

Solar Mini shows that a model does not need to be large to perform outstandingly. It outperformed competitors including Llama 2, Mistral 7B, Ko-Alpaca, and KULLM across a variety of benchmarks.

Benchmark dataset evaluation results for Solar 10.7B and Solar 10.7B-Instruct models (Source: SOLAR 10.7B: Scaling Large Language Models with Simple yet Effective Depth Up-Scaling)

Solar Mini construction process

Basic structure

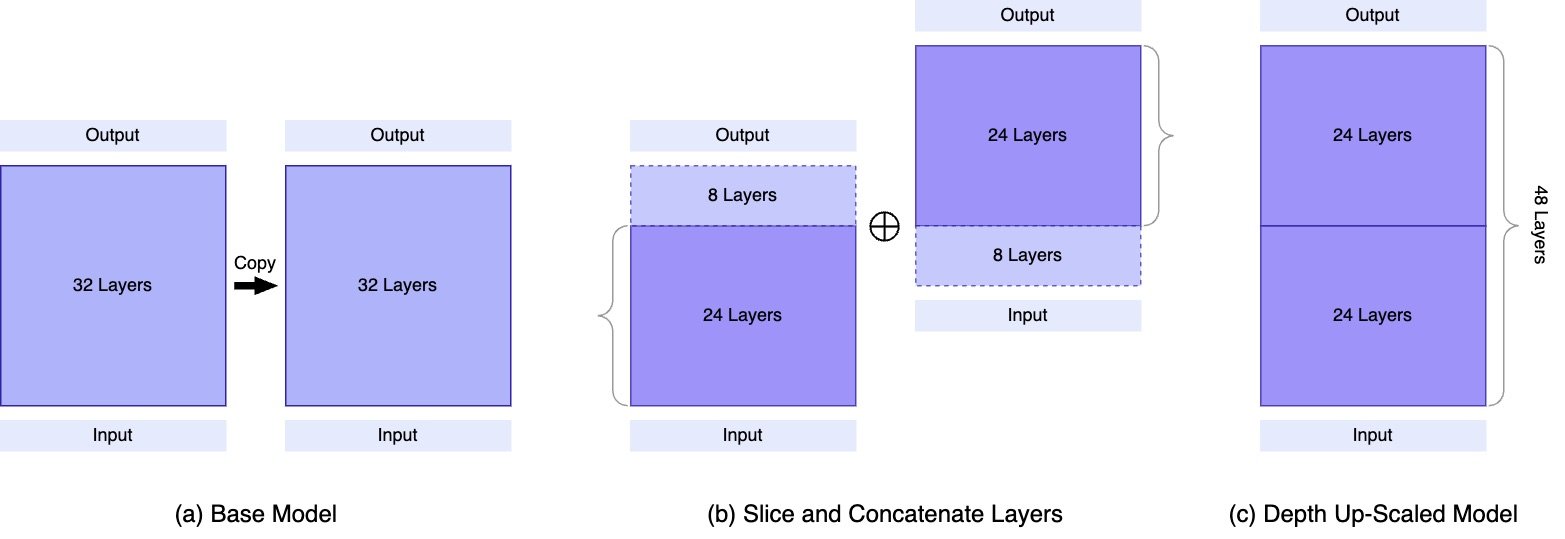

Solar Mini's base architecture is the 32-layer Llama 2 architecture, and training starts from the pre-trained weights of Mistral 7B, one of the highest-performing models compatible with the Llama 2 architecture.
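The sketch below estimates the parameter count of a Llama 2-style decoder from its configuration, to show how depth translates into model size. The default values are assumptions taken from Mistral 7B's public configuration (hidden size 4096, MLP size 14336, 32 query heads, 8 key/value heads, a 32,000-token vocabulary, untied input and output embeddings); bias-free linear layers and RMSNorm weight vectors are also assumed.

```python
def llama_param_count(
    num_layers: int = 32,
    hidden: int = 4096,
    intermediate: int = 14336,
    num_heads: int = 32,
    num_kv_heads: int = 8,
    vocab: int = 32000,
) -> int:
    """Count parameters of a Llama 2-style decoder (no biases, untied embeddings)."""
    head_dim = hidden // num_heads
    kv_dim = num_kv_heads * head_dim
    # Attention: Q and O are hidden x hidden; K and V are smaller under grouped-query attention.
    attn = 2 * hidden * hidden + 2 * hidden * kv_dim
    # SwiGLU MLP: gate, up, and down projections.
    mlp = 3 * hidden * intermediate
    # Two RMSNorm weight vectors per layer.
    norms = 2 * hidden
    per_layer = attn + mlp + norms
    # Input embedding + separate output head + final RMSNorm.
    embeddings = 2 * vocab * hidden + hidden
    return num_layers * per_layer + embeddings


print(f"32 layers: {llama_param_count(32) / 1e9:.2f}B parameters")
```

With these assumed defaults, 32 layers come to about 7.24B parameters, in line with Mistral 7B's published size. The SOLAR 10.7B paper reports that depth up-scaling grows the network to 48 layers; the same formula at 48 layers gives about 10.73B, consistent with the "10.7B" in the model's name.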

How does Solar Mini stay so compact yet perform so well? Our scaling method, ‘Depth Up-Scaling’ (DUS), consists of depthwise scaling followed by continued pre-training. DUS makes scaling up small models much simpler and more efficient than other scaling methods.

Unlike Mixture of Experts (MoE), DUS requires no complex changes to the model. Because it introduces no additional modules or dynamic components, it can be applied to any transformer architecture and stays directly compatible with easy-to-use LLM frameworks such as Hugging Face.
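The depthwise scaling step can be sketched on a plain list of layers. Following the SOLAR 10.7B paper, the n = 32 layer base model is duplicated, the last m = 8 layers are dropped from one copy and the first m = 8 from the other, and the two are stacked into 2 × (n − m) = 48 layers. This is a minimal illustration on layer identifiers, not Upstage's training code; with real layer objects (for example, a list of PyTorch decoder layers) the same slicing applies, and `copy.deepcopy` gives the duplicated layers their own weights.

```python
import copy
from typing import Sequence, TypeVar

Layer = TypeVar("Layer")


def depth_up_scale(layers: Sequence[Layer], m: int = 8) -> list[Layer]:
    """Depthwise scaling step of DUS on an ordered list of transformer layers.

    Keeps layers [0, n - m) from the original and layers [m, n) from a duplicate,
    then stacks them, giving 2 * (n - m) layers.
    """
    n = len(layers)
    if not 0 < m < n:
        raise ValueError("m must satisfy 0 < m < number of layers")
    bottom = list(layers[: n - m])                        # original copy minus its last m layers
    top = [copy.deepcopy(layer) for layer in layers[m:]]  # duplicate minus its first m layers
    return bottom + top


scaled = depth_up_scale(list(range(32)), m=8)
print(len(scaled))    # 48
print(scaled[22:26])  # [22, 23, 8, 9]: the seam where the two copies meet
```

The whole operation is list slicing and concatenation, which is why the scaled model needs no new module types and loads like any other model of the same architecture.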

Continued Pre-training

Immediately after depth up-scaling, the model performs worse than the base LLM. Continued pre-training is therefore performed to recover the performance of the expanded model.
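Continued pre-training keeps optimizing the usual pre-training objective: next-token prediction, i.e. minimizing the average negative log-likelihood of each token given the tokens before it. The toy, dependency-free sketch below computes that loss; the hand-written probability tables stand in for a real model's softmax outputs and are made up purely for illustration.

```python
import math


def next_token_nll(token_ids: list[int], probs: list[list[float]]) -> float:
    """Mean negative log-likelihood for next-token prediction.

    probs[t] is the predicted distribution over the vocabulary after seeing
    token_ids[: t + 1]; it is scored against the actual next token token_ids[t + 1].
    """
    if len(probs) != len(token_ids) - 1:
        raise ValueError("need one predicted distribution per next-token position")
    losses = [-math.log(probs[t][token_ids[t + 1]]) for t in range(len(probs))]
    return sum(losses) / len(losses)


# Vocabulary of 3 tokens; the sequence is 0 -> 1 -> 2.
uniform = [[1 / 3] * 3, [1 / 3] * 3]
confident = [[0.05, 0.9, 0.05], [0.05, 0.05, 0.9]]
print(next_token_nll([0, 1, 2], uniform))    # ln(3), about 1.0986
print(next_token_nll([0, 1, 2], confident))  # -ln(0.9), about 0.1054
```

In this framing, "recovering performance" means driving this loss back down on pre-training-style text after the layer surgery has disturbed the model.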

Instruction Tuning

At this stage, the model's Korean language skills are improved through instruction tuning focused on Korean. Instruction tuning trains the LLM on question-and-answer data so that it follows the instructions in a query precisely.
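Instruction-tuning data is typically a set of (instruction, answer) pairs rendered into single training sequences. A common practice, sketched below, is to compute the loss only on the answer tokens by labeling prompt positions with -100, the default `ignore_index` of PyTorch's cross-entropy loss. The prompt template and the whitespace "tokenizer" are hypothetical placeholders, not Solar's actual chat format or tokenizer.

```python
IGNORE_INDEX = -100  # label value skipped by PyTorch's cross-entropy loss by default

# Hypothetical prompt template, for illustration only.
TEMPLATE = "### Question:\n{instruction}\n\n### Answer:\n"


def build_example(instruction: str, answer: str, vocab: dict[str, int]) -> dict[str, list[int]]:
    """Turn one instruction/answer pair into input_ids and labels.

    Uses a toy whitespace tokenizer backed by `vocab` (unseen words get new ids).
    Prompt tokens are masked so that the loss only covers the answer.
    """
    def tokenize(text: str) -> list[int]:
        return [vocab.setdefault(word, len(vocab)) for word in text.split()]

    prompt_ids = tokenize(TEMPLATE.format(instruction=instruction))
    answer_ids = tokenize(answer)
    return {
        "input_ids": prompt_ids + answer_ids,
        "labels": [IGNORE_INDEX] * len(prompt_ids) + answer_ids,
    }


# A Korean Q&A pair: "Where is the capital of Korea?" / "It is Seoul."
example = build_example("한국의 수도는 어디인가요?", "서울입니다.", {})
print(example["input_ids"])
print(example["labels"])
```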

Alignment Tuning

The instruction-tuned model is then further tuned so that its answers match those preferred by humans or by strong existing LLMs.
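One common way to implement preference-based alignment is Direct Preference Optimization (DPO), which trains the model to raise the probability of the preferred answer, relative to a frozen reference model, more than that of the rejected answer. The sketch below computes the standard DPO loss for a single preference pair from summed sequence log-probabilities. It is a generic formulation; the input values and `beta = 0.1` are illustrative assumptions, not a description of Upstage's exact recipe.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one (chosen, rejected) pair, given summed log-probs of each answer."""
    # How much more the policy favors each answer than the reference model does.
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(margin)), written in a numerically stable form.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


print(dpo_loss(-12.0, -12.0, -12.0, -12.0))  # ln(2): policy identical to the reference
print(dpo_loss(-10.0, -15.0, -12.0, -12.0))  # lower: policy now favors the chosen answer
```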

Using Solar with Components

RAG

Solar Mini works especially well with Retrieval-Augmented Generation (RAG) systems. Larger LLMs tend to rely more on knowledge learned during pre-training when answering questions. The compact Solar Mini makes more effective use of retrieved information, improving the accuracy, relevance, and reliability of its output.
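A RAG pipeline retrieves passages relevant to the question and places them in the prompt, so the model answers from supplied context rather than from memory alone. The minimal sketch below uses a toy bag-of-words cosine similarity for retrieval and a hypothetical prompt template. A production system would typically use an embedding model and a vector index, and would then send the prompt to Solar Mini through whichever API you use; no API call is shown here.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    return sorted(docs, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Hypothetical RAG prompt: retrieved context first, then the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


docs = [
    "Solar Mini is a compact LLM developed by Upstage.",
    "Paris is the capital of France.",
    "Depth up-scaling stacks layers from two copies of a base model.",
]
query = "Who developed Solar Mini?"
print(build_prompt(query, docs, k=1))
```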

One component that can be used with Solar Mini is Layout Analyzer, which reads documents while preserving their layout. Using OCR and layout analysis modules, it processes PDF, PNG, and JPG files and extracts tables and figures from any document. It converts complex documents into HTML that follows the order and manner in which humans read them, producing data that can be fed directly into an LLM.
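Because the analyzer's output is HTML, a downstream step usually flattens it into plain-text chunks before indexing it for RAG. The sketch below does this with Python's standard `html.parser`, turning table rows into "cell | cell" lines. The sample HTML, the set of block tags, and the chunk size are assumptions for illustration and do not describe Layout Analyzer's actual output schema.

```python
from html.parser import HTMLParser

# Tags treated as self-contained text blocks (an assumption for this sketch).
BLOCK_TAGS = {"h1", "h2", "h3", "h4", "p", "li", "tr", "caption", "figcaption"}


class BlockExtractor(HTMLParser):
    """Collect text blocks in document order; table rows become 'cell | cell' lines."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: list[str] = []
        self._parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Separate table cells that belong to the same row.
        if tag in ("td", "th") and "".join(self._parts).strip():
            self._parts.append(" | ")

    def handle_data(self, data):
        self._parts.append(data)

    def handle_endtag(self, tag):
        if tag in BLOCK_TAGS:
            text = " ".join("".join(self._parts).split())  # collapse whitespace
            if text:
                self.blocks.append(text)
            self._parts = []


def html_to_chunks(html: str, max_chars: int = 500) -> list[str]:
    """Group extracted blocks into chunks of at most max_chars (an oversized block stays whole)."""
    parser = BlockExtractor()
    parser.feed(html)
    parser.close()
    chunks: list[str] = []
    current = ""
    for block in parser.blocks:
        candidate = f"{current}\n{block}" if current else block
        if len(candidate) > max_chars and current:
            chunks.append(current)
            current = block
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


sample = (
    "<h1>Report</h1><p>Solar Mini is <b>small</b>.</p>"
    "<table><tr><th>Model</th><th>Score</th></tr><tr><td>Solar</td><td>1</td></tr></table>"
)
print(html_to_chunks(sample))
```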

Finishing up

Thanks to its compact size, Solar Mini can be applied to a wide range of services and new business ventures. Stay tuned as the versatile Solar continues to develop, from fast speeds to broad domain and service applicability to on-device use.

Learn more: Solar Papers / Hugging Face / Poe

Solar Mini is publicly available under the Apache 2.0 License.
