Open Source LLM Top 5 (2024)

2023/01/12 | 3 mins

Advantages of an Open Source LLM

As interest in generative AI grows, the emergence of various open source models is changing the landscape of the artificial intelligence market. More than 10 frontier large language models (LLMs) are reportedly scheduled for release this year. Open source LLMs are attracting attention for advantages such as accessibility, transparency, and cost-effectiveness. These characteristics let companies customize a model to their needs, and through fine-tuning they can quickly develop new models without training on huge amounts of data or building their own systems. Because of these strengths, a growing number of voices in the industry argue that a healthy open source ecosystem is essential to advancing AI technology. Let's take a closer look at the benefits of open source LLMs below.

- Fast and flexible development environment

: The community creates an environment where many people can contribute to the same project, exchange ideas, and solve problems together, so bugs and technical issues can be resolved quickly. This also makes maintenance more effective.

- High accessibility and scalability

: You can customize it to fit the purpose of your project by modifying or extending the code according to your needs.

- Provide education and research opportunities based on transparency

: The transparency of open source LLMs promotes related research and is used as an educational resource for students and researchers.

- Cost reduction

: Using freely available open source software instead of developing your own system reduces cost and time, and eases the capital burden on startups and companies developing new products and services.

LLM adoption trend (Source: A Comprehensive Overview of Large Language Models)

The open source LLM boom began in earnest in February 2023, when Meta released LLaMA to the research community. This led to the emergence of several 'sLLMs' (small large language models) based on LLaMA. An sLLM usually has 6 billion (6B) to 10 billion (10B) parameters, far fewer than existing LLMs, yet its performance is comparable, giving it the strengths of low cost and high efficiency. The efficiency is even more apparent when compared with OpenAI's GPT-3 (175 billion parameters), Google's LaMDA (137 billion parameters), and PaLM (540 billion parameters).
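To make the size gap concrete, the minimal sketch below estimates the memory needed just to hold each model's weights. It assumes 16-bit weights (2 bytes per parameter) and ignores activations, caches, and optimizer state, so treat the figures as rough lower bounds. The function name `weight_memory_gb` is hypothetical; the parameter counts are the ones quoted above.

```python
# Rough weight-only memory estimate: parameters x bytes per parameter.
# Assumption: 16-bit weights (2 bytes each); real deployments vary.
BYTES_PER_PARAM_FP16 = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Return the approximate size of the weights in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Parameter counts as quoted in the text above.
models = {
    "sLLM (6B)": 6e9,
    "sLLM (10B)": 10e9,
    "LaMDA (137B)": 137e9,
    "GPT-3 (175B)": 175e9,
    "PaLM (540B)": 540e9,
}

for name, params in models.items():
    print(f"{name:>13}: ~{weight_memory_gb(params):,.0f} GB of weights")
```

Under these assumptions a 6B to 10B model needs roughly 12 to 20 GB for its weights, while the larger models need hundreds of gigabytes.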

So what are the five best open source LLMs to watch in 2024?

Top 5 Open Source LLMs to Watch in 2024

1. Llama 2

Source: Meta AI

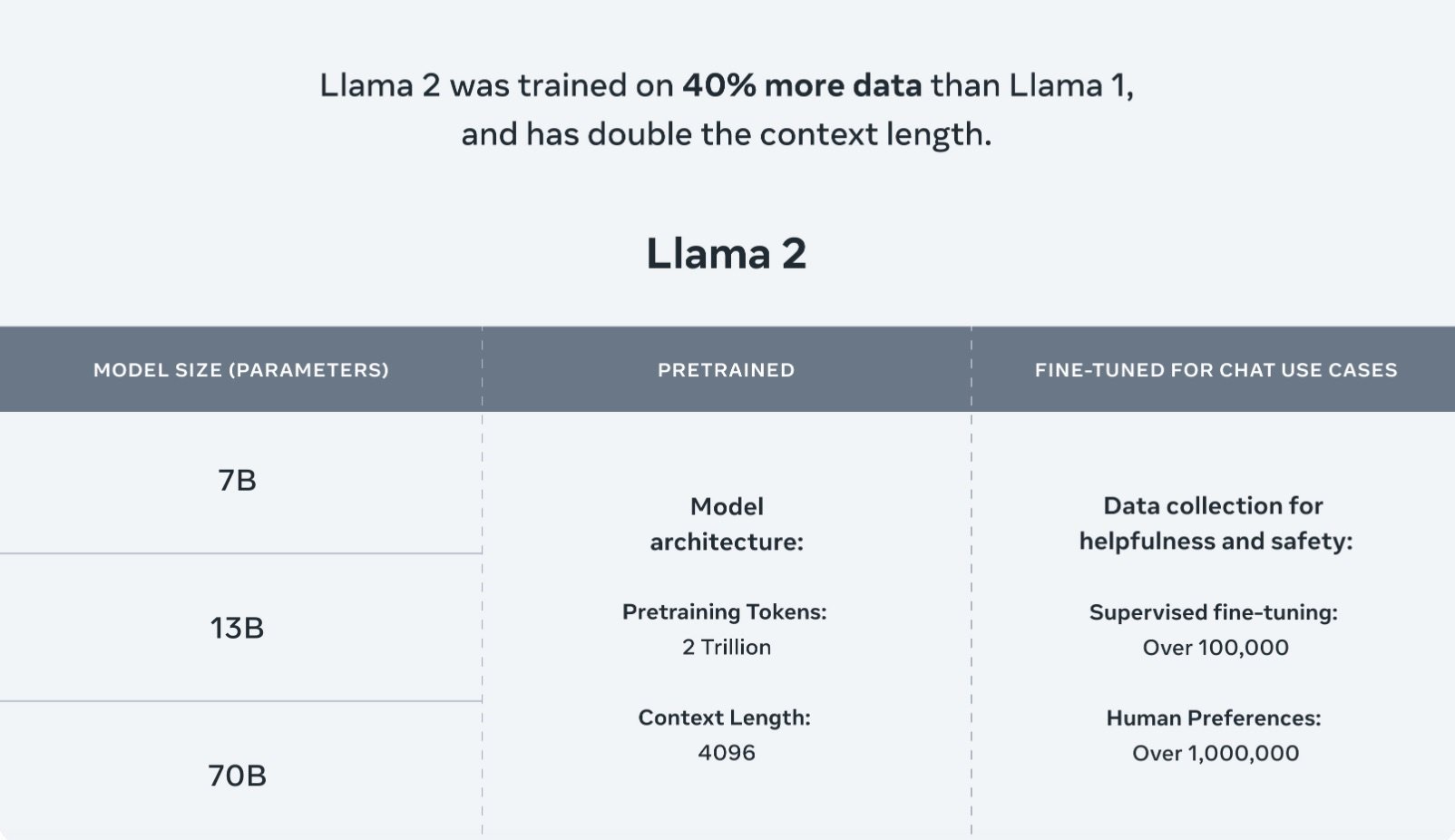

Llama 2 is an open source LLM developed by Meta AI and is one of the most popular open source LLMs. Released on July 18, 2023, it is the first version of Llama licensed for commercial use. Meta trained it in four sizes from 7B to 70B (the 7B, 13B, and 70B models were publicly released), and its pre-training data consists of 2 trillion tokens, more than Llama 1.

Llama 2 uses the standard transformer architecture with features such as root mean square layer normalization (RMSNorm) and rotary positional embedding (RoPE). Llama 2-Chat starts from supervised fine-tuning and is then improved through reinforcement learning from human feedback (RLHF). It uses the same tokenizer as Llama 1, a byte pair encoding (BPE) algorithm implemented with SentencePiece. Meta also ran safety checks to address concerns about truthfulness, harm, and bias, which are important issues for LLMs. Llama 2 can be fine-tuned on multiple platforms, including Azure and Windows, making it useful for a variety of projects.

Llama 2-Chat, optimized for two-way conversation through reinforcement learning from human feedback (RLHF) and reward modeling (Source: Meta AI)
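For readers unfamiliar with the two architectural pieces named above, here is a minimal pure-Python sketch of RMSNorm and RoPE applied to a single vector. It is an illustration of the general techniques, not Meta's implementation: the function names, the `eps` value, the base of 10000, and the adjacent-pair layout in `rope` are assumptions, and production code operates on batched tensors with learned gains.

```python
import math
from typing import List


def rms_norm(x: List[float], gain: List[float], eps: float = 1e-6) -> List[float]:
    """Divide x by its root mean square, then apply a per-element gain."""
    mean_square = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_square + eps)
    return [v * inv_rms * g for v, g in zip(x, gain)]


def rope(x: List[float], position: int, base: float = 10000.0) -> List[float]:
    """Rotate each adjacent pair (x[i], x[i+1]) by an angle that depends on position."""
    d = len(x)
    if d % 2 != 0:
        raise ValueError("RoPE needs an even number of dimensions")
    out: List[float] = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)  # lower frequency for later pairs
        c, s = math.cos(theta), math.sin(theta)
        out.extend([x[i] * c - x[i + 1] * s, x[i] * s + x[i + 1] * c])
    return out


hidden = [1.0, -2.0, 3.0, -4.0]
gain = [1.0] * len(hidden)  # learnable in a real model; fixed to 1 here
normed = rms_norm(hidden, gain)
rotated = rope(hidden, position=5)
print(normed)
print(rotated)
```

RMSNorm rescales the vector so its mean square is close to 1, and RoPE encodes position as a rotation, so it changes direction but not length.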

2. Mistral

Mistral-7B is a model released by Mistral AI, built with customized training, tuning, and data processing methods. It is an open source model provided under the Apache 2.0 license and is designed for real-world applications, offering both efficiency and high performance. At launch, it outperformed Llama 2 13B, one of the best open source models at the time, on all benchmarks evaluated. It performs strongly on a variety of benchmarks, including math, code generation, and reasoning.

Performance comparison of Mistral 7B and Llama models on various benchmarks (Source: Mistral 7B)

Mistral-tiny, based on Mistral-7B, is suitable for high-volume batch processing tasks that do not require complex reasoning, and it is the most cost-effective option for applications. Mistral-small (Mixtral 8x7B Instruct v0.1) supports five languages (English, French, Italian, German, and Spanish) and performs well at code generation. Mistral-medium is reported to use a high-performance prototype model that surpasses GPT-3.5, making it suitable for high-quality applications.

3. Solar

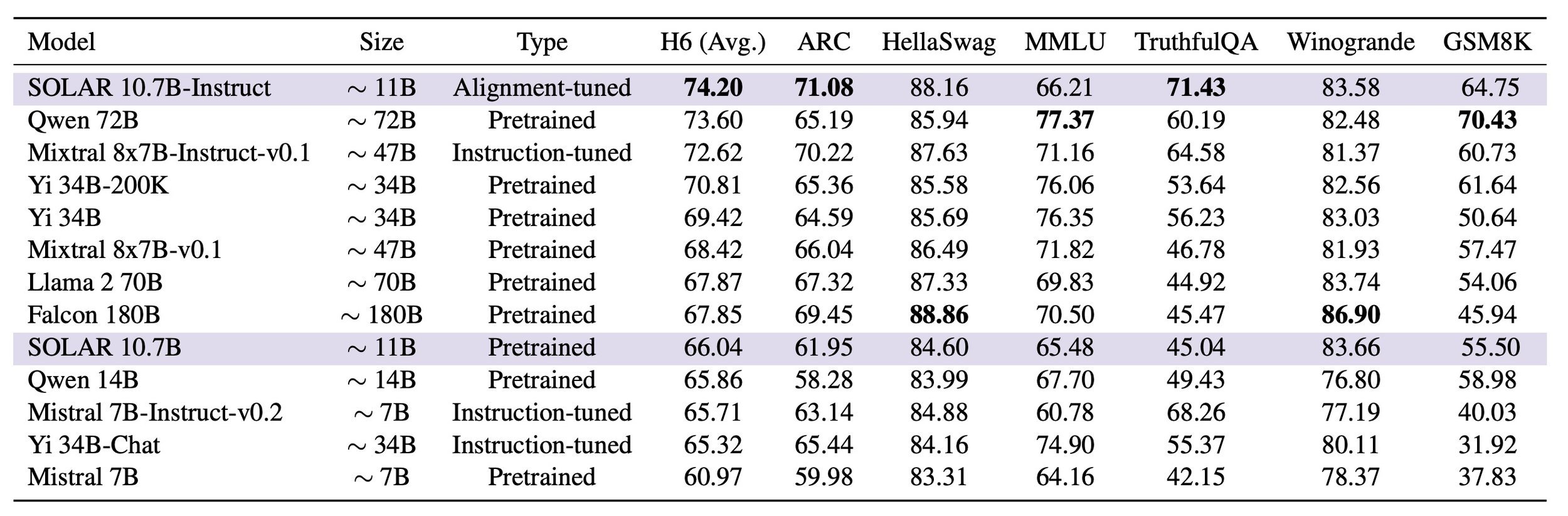

Solar is a small language model released by Upstage. With 10.7 billion parameters, Solar 10.7B is an open source LLM that outperforms existing models such as Llama 2 and Mistral-7B on essential natural language processing (NLP) tasks while remaining efficient. In December 2023, it took first place on the Open LLM Leaderboard operated by Hugging Face, the world's largest machine learning platform. The result is all the more meaningful because it made Solar the world's best-performing model under 30 billion parameters (30B), the usual threshold for a small LLM (sLLM).

To optimize the performance of the compact Solar model, Upstage used a method called 'Depth Up-Scaling'. The goal is to find a model size that combines the advantages of a 13B model, which performs well but is large, and a 7B model, which is small enough but limited in capability. Upstage applied its own Depth Up-Scaling method, based on open source 7B models, to maximize the performance of a small model by adding layers and depth. Unlike mixture-of-experts (MoE), Depth Up-Scaling does not require complex changes to training and inference. This approach allowed Solar to launch at a scaled-up 10.7B parameters, trained on high-quality data of over 3 trillion tokens, with an optimal balance of size and performance.
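The layer-stacking idea behind Depth Up-Scaling can be sketched with plain lists. This is a toy illustration on layer names, not real weights, and it leaves out the continued pre-training that follows the scaling step. The specific numbers (a 32-layer base model with 8 layers trimmed from each copy) follow the description in the SOLAR 10.7B paper as best understood here and are not stated in this article, so treat them as assumptions; `depth_up_scale` is a hypothetical name.

```python
from typing import List


def depth_up_scale(layers: List[str], m: int) -> List[str]:
    """Stack two copies of a model, dropping m layers at the seam of each copy."""
    if not 0 <= m < len(layers):
        raise ValueError("m must be in [0, number of layers)")
    first_copy = layers[: len(layers) - m]  # original without its final m layers
    second_copy = layers[m:]                # duplicate without its initial m layers
    return first_copy + second_copy


base_layers = [f"layer_{i}" for i in range(32)]  # assumed 32-layer 7B base model
scaled = depth_up_scale(base_layers, m=8)        # assumed m = 8

print(len(scaled))             # 48
print(scaled[23], scaled[24])  # layer_23 layer_8
```

The scaled model keeps the base architecture and only becomes deeper, which is why no routing or gating logic is needed, in contrast to MoE.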

Notably, Solar did not use the leaderboard benchmark datasets in the pre-training and fine-tuning stages, but applied data that Upstage built itself. This suggests that, unlike models that train directly on benchmark sets to raise leaderboard scores, Solar can be highly usable across a variety of tasks in real work.

Evaluation results of SOLAR 10.7B, SOLAR 10.7B-Instruct, and other language models (Source: SOLAR 10.7B: Scaling Large Language Models with Simple yet Effective Depth Up-Scaling)

4. Yi

Yi-34B was developed by the Chinese startup 01.AI, which became a unicorn with a valuation of more than $1 billion within eight months. The Yi series aims to be multilingual and is trained on a high-quality multilingual corpus of 3 trillion (3T) tokens. It has shown notable performance in language comprehension, common sense reasoning, and reading comprehension. Yi is offered in 6B and 34B sizes, and its context window can be extended up to 32K tokens at inference time.

According to Yi's GitHub, the 6B series model is suitable for personal and academic use, and the 34B series model is suitable for commercial, personal, and academic use. Yi series models use the same model architecture as LLaMA, allowing users to take advantage of LLaMA's ecosystem. 01.AI aims to become a leader in the generative AI market and plans to launch improved models and expand commercial products this year.

5. Falcon

Source: Technology Innovation Institute

Falcon is a generative large language model launched by the Technology Innovation Institute (TII) in the United Arab Emirates (UAE). It is available with 180B, 40B, 7.5B, and 1.3B parameters. Falcon 40B is available royalty-free to both researchers and commercial users. It operates in 11 languages and can be fine-tuned to suit specific requirements. Falcon 40B used less training compute than GPT-3 and Chinchilla, and focuses on high-quality training data. The largest model has 180 billion parameters and was trained on 3.5 trillion tokens, providing excellent performance.
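To get a feel for the scale of training a model like this, a common rule of thumb approximates training compute as 6 × parameters × tokens floating-point operations. The sketch below applies it to the Falcon 180B figures quoted above. The rule is a general approximation rather than a number reported by TII, it ignores architecture and hardware details, and `approx_training_flops` is a hypothetical name.

```python
def approx_training_flops(num_params: float, num_tokens: float) -> float:
    """Rule-of-thumb estimate: about 6 FLOPs per parameter per training token."""
    return 6.0 * num_params * num_tokens


# Figures from the text: 180 billion parameters, 3.5 trillion tokens.
falcon_180b_flops = approx_training_flops(180e9, 3.5e12)
print(f"Falcon 180B: ~{falcon_180b_flops:.2e} FLOPs")
```

Because the estimate scales with both model size and token count, a smaller model trained on more tokens can cost as much as a larger model trained on fewer.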

The Future of AI Opens with Open Source LLM

Today it is difficult to discuss change and innovation in the AI market without LLMs, which are being applied across a variety of industries and giving rise to new ideas and technologies. Open source LLMs, which provide an ecosystem where anyone can participate, improve, and learn, will therefore play an innovative role in shaping the future of AI. It will be worth watching what other surprises AI technology developed through open source LLMs brings this year.
