THE OPEN SOURCE LLM ECOSYSTEM AND KOREAN MODELS

2023/10/26 | 5 min read

Haley (Content Communication)

-

ANYONE CURIOUS ABOUT TRENDS IN GENERATIVE AI AND OPEN SOURCE

THOSE WHO WANT TO KNOW ABOUT KOREAN LLMS AND THEIR ECOSYSTEM

-

THE ARTIFICIAL INTELLIGENCE (AI) MARKET IS BEING RESHAPED BY A GROWING NUMBER OF OPEN SOURCE MODELS. WE LOOK AT 'OPEN SOURCE LLMS', WHICH HAVE LOWERED THE BARRIER TO BUILDING LLMS AND EMERGED AS A NEW WAVE IN THE ERA OF GENERATIVE AI, AND AT THE IMPACT THEY WILL HAVE ON THE LLM ECOSYSTEM.

-

✔️ What is open source?

✔️ EMERGENCE OF OPEN SOURCE LLM

✔️ MAJOR OPEN SOURCE LLM MODELS

✔️ IMPACT OF OPEN SOURCE ON THE LLM ECOSYSTEM

✔️ ‘Open Ko-LLM Leaderboard’ to strengthen Korean AI competitiveness

The artificial intelligence (AI) market is being reshaped by a growing number of open source models. Since Meta made LLaMA openly available, latecomers to the field, unlike big tech companies such as OpenAI and Google, have increasingly released their models as open source. In this insight blog, we look at how 'open source LLMs' have emerged as a new wave in the generative AI era by lowering the barrier to building LLMs, and at what impact this will have on the LLM ecosystem.

What is open source?

Open source software (SW) is used across many areas considered core technologies of the Fourth Industrial Revolution, such as artificial intelligence, big data, cloud, and IoT. To understand the background of open source, we need to look at the early history of computer software. When computers were first developed, software was written mainly in academia and research institutes, and the code was shared freely. As the commercial software market grew, however, many companies began to keep their code private. In response, Richard Stallman launched the 'Free Software Movement' in the 1980s to restore the free sharing of code that had been the original way software was produced and distributed. Later, as related foundations and associations were established, the term 'open source' came into use.

💡 What is open source?

: A term for software whose source code is publicly available, so that anyone can freely review, modify, and distribute it. Each open source project's license determines how users may use, modify, or distribute the software.

As demand for language model development grows, open source is drawing great attention in the LLM market, mainly because of the cost savings. By fine-tuning an open source model, a new model can be developed quickly without training on massive amounts of data or building one's own system from scratch.

💡 Advantages of open source

Fast and flexible development environment: Provides an environment where many people can exchange ideas and solve problems while contributing to the same project.

Extensibility: The code can be modified or extended to meet users' needs and customized to suit the purpose of a project.

Cost reduction: Using open source software, which is free to use, saves the cost and time of developing your own system.

THE EMERGENCE OF OPEN SOURCE LLM

Open source LLMs, which are rapidly emerging as a new keyword, began to attract attention in February of this year, when Meta opened LLaMA to the academic community and many 'sLLMs' (smaller large language models) built on LLaMA appeared. An sLLM typically has 6 billion (6B) to 10 billion (10B) parameters, far fewer than existing LLMs, yet its performance is not far behind, which gives it the strengths of low cost and high efficiency. The efficiency gap is even clearer when compared with OpenAI's 'GPT-3' (175 billion parameters) and Google's 'LaMDA' (137 billion parameters) and 'PaLM' (540 billion parameters).
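To make the size gap concrete, the short Python sketch below divides each large model's parameter count from the paragraph above by that of a 7B sLLM (7B is an assumed example value within the 6B to 10B range) and estimates raw weight storage, assuming 16-bit weights at 2 bytes per parameter and ignoring activations and other runtime memory. It is back-of-the-envelope arithmetic, not a measurement of real deployment cost.

```python
# Back-of-the-envelope comparison of model sizes (parameter counts in billions).
# Large-model sizes come from the text; SLLM_SIZE_B = 7 is an assumed example
# value inside the 6B-10B sLLM range.
LARGE_MODELS_B = {"GPT-3": 175, "LaMDA": 137, "PaLM": 540}
SLLM_SIZE_B = 7


def size_ratio(large_b: float, small_b: float) -> float:
    """How many times more parameters the large model has than the small one."""
    return large_b / small_b


def weight_memory_gb(params_b: float, bytes_per_param: int = 2) -> float:
    """Approximate weight storage in GB (10**9 bytes).

    Assumes 16-bit weights (2 bytes per parameter) by default and ignores
    activations, KV cache, and optimizer state.
    """
    return params_b * bytes_per_param


if __name__ == "__main__":
    print(f"{SLLM_SIZE_B}B sLLM: ~{weight_memory_gb(SLLM_SIZE_B):.0f} GB of weights")
    for name, params_b in LARGE_MODELS_B.items():
        ratio = size_ratio(params_b, SLLM_SIZE_B)
        print(f"{name}: {ratio:.1f}x the parameters, ~{weight_memory_gb(params_b):.0f} GB of weights")
```

Under these assumptions, GPT-3's weights alone come to about 350 GB, versus about 14 GB for a 7B model, which is one reason smaller models are cheaper to serve and fine-tune.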

Mark Zuckerberg, CEO of Meta Platforms, released LLaMA 2 as open source last July and said, “I believe that the more the ecosystem is opened, the more progress will be possible.” Companies that release open source models in this way believe the industry will advance by lowering the barrier to AI technology and creating an ecosystem in which many organizations and developers can compete freely and innovate.

MAJOR OPEN SOURCE LLM MODELS

SO WHICH OPEN SOURCE LLM MODELS ARE MOST WIDELY USED?

1. LLaMA

LLaMA 2-Chat, optimized for dialogue through reinforcement learning from human feedback (RLHF) and reward modeling

(Source: Llama 2: Open Foundation and Fine-Tuned Chat Models)

A representative example is Meta's 'LLaMA', which popularized open source LLMs. LLaMA 2, a version licensed for commercial use, was released on July 18, 2023. It uses reinforcement learning from human feedback (RLHF) and reward modeling to produce more helpful and safer outputs for tasks such as text generation, summarization, and question answering. LLaMA 2 comes in three sizes: 7B, 13B, and 70B. Generation time varies with model size, but compared with the previous model, accuracy has improved and safeguards against harmful text generation have been strengthened. Support for platforms such as Azure and Windows has also been expanded, and the models can be fine-tuned for use in a wide variety of projects.
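Reward modeling is the step in which a model learns to score responses, so that RLHF has a signal to optimize. The Llama 2 paper describes training its reward models on pairs of responses where human annotators preferred one over the other, using a binary ranking loss of the form -log σ(r_chosen - r_rejected - m), where m is an optional margin. The minimal Python sketch below computes that loss for a single pair of scalar rewards; the function name and the plain-float inputs are illustrative assumptions, and this is not Meta's training code.

```python
import math


def pairwise_ranking_loss(r_chosen: float, r_rejected: float, margin: float = 0.0) -> float:
    """Binary ranking loss -log(sigmoid(r_chosen - r_rejected - margin)).

    r_chosen:   reward-model score for the response annotators preferred
    r_rejected: reward-model score for the other response
    margin:     optional extra gap to enforce (0.0 gives the plain pairwise loss)
    """
    d = r_chosen - r_rejected - margin
    # -log(sigmoid(d)) == log(1 + exp(-d)); branch on the sign of d so exp() never overflows.
    if d >= 0:
        return math.log1p(math.exp(-d))
    return -d + math.log1p(math.exp(d))


if __name__ == "__main__":
    print(pairwise_ranking_loss(1.0, 1.0))  # equal rewards: loss is log(2)
    print(pairwise_ranking_loss(3.0, 0.0))  # preferred response scored higher: small loss
    print(pairwise_ranking_loss(0.0, 3.0))  # ranking is wrong: large loss
```

Minimizing this loss pushes the preferred response's reward above the rejected one's; a larger margin demands a wider gap before the loss becomes small.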

2. MPT-7B

Source: MosaicML Blog

MPT-7B (MosaicML Pretrained Transformer) is an open source LLM released by MosaicML: a transformer trained on 1 trillion tokens. The base model is licensed for commercial use, and in addition to it there are three derivative models built on top of it (MPT-7B-Instruct, MPT-7B-Chat, MPT-7B-StoryWriter-65k+). MPT-7B is reported to match the quality of LLaMA-7B, Meta's 7-billion-parameter model.

3. Alpaca

Source: Stanford University

Alpaca is an open source model released by Stanford University for academic research. The Stanford researchers pointed out that even with the emergence of models such as ChatGPT, Claude, and Bing Chat, these models can still generate incorrect information or harmful text. Believing that participation from academia is essential to solving these problems and advancing the technology, they released Alpaca to support continued research on such models. Alpaca is based on Meta's LLaMA-7B and was fine-tuned on instruction-following data so that the language model responds appropriately to user instructions.

4. Vicuna

Source: LMSYS.org

Vicuna, created by LMSYS Org, is also based on LLaMA. It was reportedly fine-tuned on a training set of about 70,000 user-shared conversations collected from ShareGPT.com. According to the Vicuna team, a preliminary evaluation using GPT-4 as a judge showed that Vicuna-13B reached more than 90% of the quality of ChatGPT and Google Bard. The code, along with an online demo, is free for anyone to use for non-commercial purposes.
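A claim like "more than 90% of ChatGPT quality" is a relative score: a judge model rates each model's answers to the same questions, and the totals are compared. The Python sketch below shows that arithmetic, assuming relative quality is the candidate's summed judge scores divided by the reference model's summed scores. The function name and the four per-question scores are made up for illustration; they are not Vicuna's actual evaluation data or code.

```python
def relative_quality(candidate_scores: list[float], reference_scores: list[float]) -> float:
    """Candidate's summed judge scores as a percentage of the reference model's."""
    if len(candidate_scores) != len(reference_scores):
        raise ValueError("both score lists must cover the same questions")
    reference_total = sum(reference_scores)
    if reference_total <= 0:
        raise ValueError("reference total must be positive")
    return 100.0 * sum(candidate_scores) / reference_total


if __name__ == "__main__":
    # Hypothetical 1-10 judge scores on four questions (illustrative only).
    candidate = [8, 9, 7, 9]
    reference = [9, 9, 8, 10]
    # prints "91.7% of the reference model's score"
    print(f"{relative_quality(candidate, reference):.1f}% of the reference model's score")
```

With these made-up scores the candidate totals 33 against the reference's 36, which works out to about 91.7%. A judge-based score like this is only as reliable as the judge and the question set, which is why the Vicuna team called its evaluation preliminary.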

5. Falcon

Source: Technology Innovation Institute

Falcon is a model family released by the Technology Innovation Institute (TII) in the United Arab Emirates (UAE). Falcon-40B is one of the representative open source models available to both researchers and commercial users, and the Falcon-180B model, with 180 billion parameters trained on 3.5 trillion tokens, delivers strong performance.
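The training-data figures given for MPT-7B (1 trillion tokens) and Falcon-180B (3.5 trillion tokens) can be put on a common footing by dividing tokens by parameters. The Python sketch below does only that arithmetic; it uses MPT-7B's nominal 7B size as an approximation, and the ratio by itself says nothing about model quality.

```python
def tokens_per_parameter(training_tokens: float, parameters: float) -> float:
    """Average number of training tokens seen per model parameter."""
    return training_tokens / parameters


# (training tokens, parameters) from the figures in the text; 7e9 is MPT-7B's
# nominal size, used here as an approximation.
MODELS = {
    "MPT-7B": (1.0e12, 7e9),
    "Falcon-180B": (3.5e12, 180e9),
}

if __name__ == "__main__":
    for name, (tokens, params) in MODELS.items():
        print(f"{name}: ~{tokens_per_parameter(tokens, params):.0f} tokens per parameter")
```

With these figures, MPT-7B saw roughly 143 training tokens per parameter and Falcon-180B about 19, so the smaller model was trained on far more data relative to its size.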

IMPACT OF OPEN SOURCE ON THE LLM ECOSYSTEM

As we have seen, open source is being used in many areas because it makes AI technology more accessible and transparent. It also has drawbacks, such as concerns about misuse. Nevertheless, open source AI is sustainable because it has a positive impact on the development of the LLM ecosystem and helps organizations with far less capital than big tech companies to research and develop new models and services efficiently.

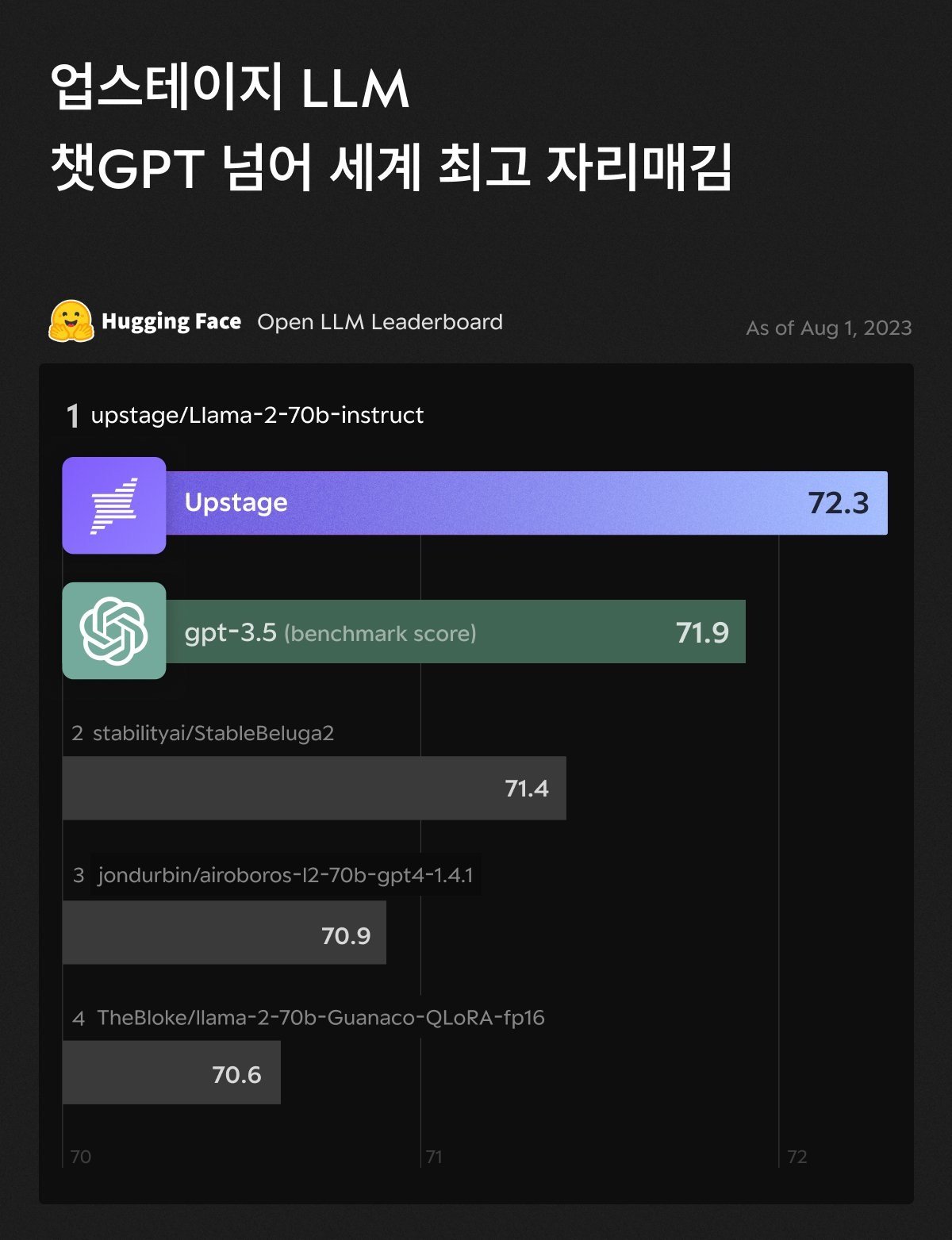

Thanks to this trend, 'Hugging Face', the largest open source platform in natural language processing, has also drawn attention. Hugging Face operates the 'Open LLM Leaderboard', where generative AI models developed by companies and research institutes around the world compete on evaluated performance. More than 500 open source generative AI models are ranked there on four indicators: reasoning, common sense, comprehensive language understanding, and hallucination prevention. The leaderboard is always open and is updated with new evaluation results each time a new model is submitted.
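The ranking itself is simple arithmetic: each model gets one score per indicator, and models are ordered by the average. At the time of writing, the Open LLM Leaderboard measured the four indicators with the ARC, HellaSwag, MMLU, and TruthfulQA benchmarks. The Python sketch below reproduces only that averaging and sorting step; the model names and scores are hypothetical, and it does not call any Hugging Face API.

```python
from statistics import mean

# Benchmarks behind the four indicators (reasoning, common sense,
# language understanding, hallucination/truthfulness).
BENCHMARKS = ("ARC", "HellaSwag", "MMLU", "TruthfulQA")


def leaderboard(results: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (model, average score) pairs sorted from best to worst."""
    rows = []
    for model, scores in results.items():
        missing = [b for b in BENCHMARKS if b not in scores]
        if missing:
            raise ValueError(f"{model} is missing scores for {missing}")
        rows.append((model, mean(scores[b] for b in BENCHMARKS)))
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Hypothetical models and scores, made up for illustration only.
RESULTS = {
    "model-b": {"ARC": 64.0, "HellaSwag": 84.0, "MMLU": 64.0, "TruthfulQA": 50.0},
    "model-a": {"ARC": 70.0, "HellaSwag": 86.0, "MMLU": 68.0, "TruthfulQA": 56.0},
}

if __name__ == "__main__":
    for rank, (model, avg) in enumerate(leaderboard(RESULTS), start=1):
        print(f"{rank}. {model}: {avg:.2f}")
```

Because every indicator carries equal weight in the average, a model cannot reach the top by excelling on a single benchmark alone.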

Source: Hugging Face

Among Korean companies, AI startup Upstage made headlines last August when its generative AI model surpassed the performance of GPT-3.5, the model behind ChatGPT, and took first place. In July, Upstage released a 30B (30 billion) parameter model on Hugging Face that achieved an average score of 67, surpassing Meta's LLaMA 2 70B model announced the same day and becoming the first Korean LLM to reach first place. Upstage then released a model fine-tuned from LLaMA 2 70B (70 billion parameters) that scored 72.3 on the leaderboard, cementing its global first place.

On the Hugging Face leaderboard, Upstage was the first to surpass the score of GPT-3.5, the model most closely associated with generative AI. Last September, Upstage's LLM 'SOLAR', having proven its global competitiveness, also became one of the main models on the generative AI platform 'Poe'. This recognizes its performance as comparable to ChatGPT, Google PaLM, Meta Llama, and Anthropic Claude, and it is a representative example showing that even startups with limited capital and manpower can develop a world-class model by building on open source.

THE GENERATIVE AI MODEL DEVELOPED BY KOREA'S LEADING AI STARTUP UPSTAGE SURPASSED CHATGPT AND TOOK FIRST PLACE IN THE HUGGING FACE OPEN LLM LEADERBOARD RANKINGS (AUGUST 2023)

‘Open Ko-LLM Leaderboard’ to strengthen Korean AI competitiveness

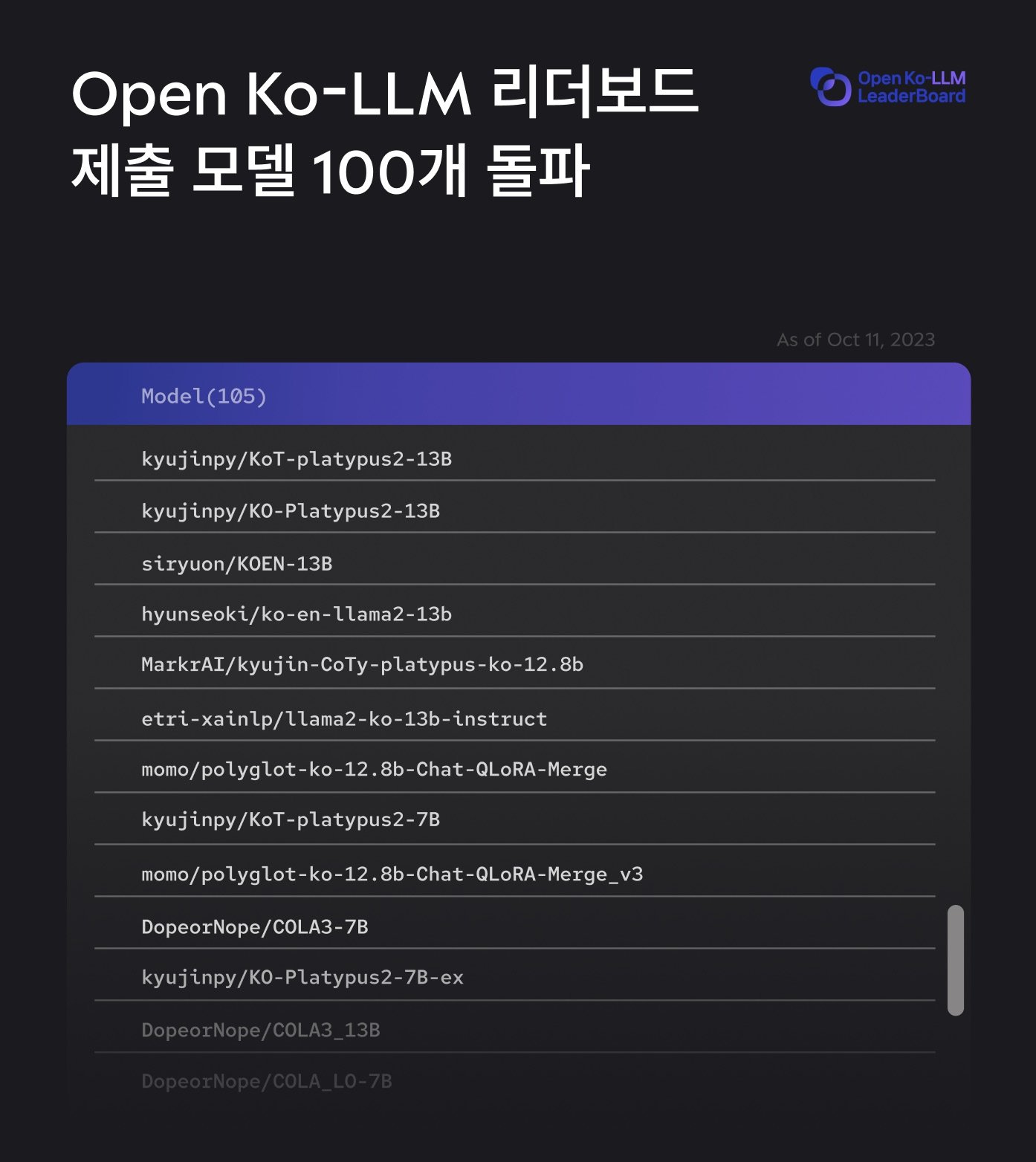

There is also a growing movement in Korea to expand the open source AI ecosystem. Upstage, which took first place on the Hugging Face Open LLM Leaderboard, recently joined hands with the National Information Society Agency (NIA) to launch the 'Open Ko-LLM Leaderboard' for evaluating and comparing the performance of Korean LLMs. Rather than simply translating the existing data of the Open LLM Leaderboard operated by Hugging Face, the Open Ko-LLM Leaderboard builds its own high-quality data reflecting the characteristics and culture of the Korean language, which is its strength as a Korean-specific leaderboard. It also adds a criterion for evaluating common sense generation, so that models can be assessed from more angles. This makes it easier to catch hallucinations such as the 'King Sejong's MacBook throwing incident', widely known as the most representative example of hallucination in Korea, and makes it possible to compare and evaluate models that are better suited to the Korean language and history.

The Open Ko-LLM Leaderboard is expanding rapidly, with more than 100 models registered in just two weeks since its launch. With well-known existing Korean open source models such as 'Ko-Alpaca', Korea University's 'KULLM', and 'Polyglot-Ko' joining, it has established itself as the industry's barometer for evaluating Korean-specific LLM performance. Together with the '1T Club', which offers a win-win profit-sharing model to partners who contribute more than 100 million words of Korean data to help Korea build its own LLMs, it is expected to serve as a focal point for the domestic open source LLM camp.

WHAT WAVES AND ADVANCES WILL OPEN SOURCE BRING TO THIS RAPIDLY EVOLVING GENERATIVE AI MARKET? WE LOOK FORWARD TO THE INNOVATION THE OPEN SOURCE CAMP WILL BRING, NOT ONLY TO THE GLOBAL MARKET BUT ALSO TO KOREA.

-

Upstage is a leading Korean AI startup founded in October 2020. Upstage stands out in the large language model (LLM) industry, having taken first place on the Hugging Face Open LLM Leaderboard with a score exceeding ChatGPT's benchmark score for the first time in the leaderboard's history. Building on this technology, we offer a reliable private LLM standard that maximizes data security and addresses hallucination, helping companies easily adopt cutting-edge technology. In addition, Upstage's chat AI 'AskUp' has more than 1.4 million users, establishing itself as the largest AI service in Korea. Document AI Pack, another representative Upstage solution, uses AI OCR technology that won the world's most prestigious OCR competition to automate document processing with greater efficiency and accuracy. Because it optimizes document processing with a pre-trained model that needs minimal data, it dramatically reduces cost and time compared with manual methods. Finally, through our education program 'EduStage', we are also active in the educational content business, training differentiated professionals who can be put to work on AI projects immediately, through hands-on education that combines real AI business experience with solid AI fundamentals.

Upstage's team includes members from global big tech companies such as Google, Apple, Amazon, NVIDIA, Meta, and Naver, and has participated in many world-renowned AI conferences such as NeurIPS, ICLR, CVPR, ECCV, WWW, CHI, WSDM, and DMLR. We are solidifying our unrivaled leadership in AI technology by publishing excellent papers and by becoming the only Korean company to win double-digit gold medals in the online AI competition Kaggle. While a professor at the Hong Kong University of Science and Technology, Upstage CEO Kim Seong-hoon won the ACM SIGSOFT Distinguished Paper Award four times for research on bug prediction and automatic source code generation that combined software engineering and machine learning, won 10 awards at the International Conference on Software Maintenance, and received the Most Influential Paper Award in 2018. He is regarded as a world-class AI expert and is also widely known as the instructor of 'Deep Learning for Everyone', a lecture series with more than 7 million total views. Upstage's co-founders also include CTO Lee Hwal-seok, who led Naver's Visual AI/OCR work to world-class results, and CSO Park Eun-jung, who led the model team of Papago, the world's best translator.